The example linked above has an attribute class that works perfectly for this: DisableFormValueModelBindingAttribute.cs. DO prevent MVC from model-binding the request Chunking files is a separate technique for file uploads – and if you need some features such as the ability to pause and restart or retry partial uploads, chunking may be the way you need to go. It is not the same as file “chunking”, although the name sounds similar. It is important to note that although it has “multi-part” in the name, the multipart request does not mean that a single file will be sent in parts. By using the multipart form-data request you can also support sending additional data through the request. The multipart request (which can actually be for a single file) can be read with a MultipartReader that does NOT need to spool the body of the request into memory. I think the explanation in the swagger documentation is also really helpful to understand this type of request. Multipart (multipart/form-data) requests are a special type of request designed for sending streams, that can also support sending multiple files or pieces of data. You’ll see this in the file upload example. To do that, we are going to use several of the helpers and guidance from the MVC example on file uploads. The other piece we need is getting the file to Azure Blob Storage during the upload process. NET Core 3.0 but the pieces we need will work just fine with.

#BLOB STORAGE VS FILE STORAGE CODE#

At the time of this article, the latest version of the sample code available is for.

#BLOB STORAGE VS FILE STORAGE DOWNLOAD#

And just go ahead and download the whole example, because it has some of the pieces we need. In fact, if you are reading this article I highly recommend you read that entire document and the related example because it covers the large file vs small file differences and has a lot of great information. NET MVC, and it is here, in the last section about large files. There is one example in Microsoft’s documentation that covers this topic very well for. Your byte array will work fine for small files or light loading, but how long will you have to wait to put that large file into that byte array? And if there are multiple files? Just don’t do it, there is a better way. For the same reasons as above, we don’t want to do this. This one should be kind of obvious, because what does a memory stream do? Yes, read the file into memory. This is slow and it is wasteful if all we want to do is forward the data right on to Azure Blob Storage. But when it comes to files, any sort of model binding will try to…you guessed it, read the entire file into memory.

MVC is very good at model binding from the web request. Which is exactly what we don’t want to do with large files. If you let MVC try to bind to an IFormFile, it will attempt to spool the entire file into memory. Handling large file uploads is complex and before tackling it, you should see if you can offload that functionality to Azure Blob Storage entirely. You may be able to use client-side direct uploads if your architecture supports generating SAS (Shared Access Signature) upload Uris, and if you don’t need to process the upload through your API. NET MVC for uploading large files to Azure Blob Storage DON’T do it if you don’t have to

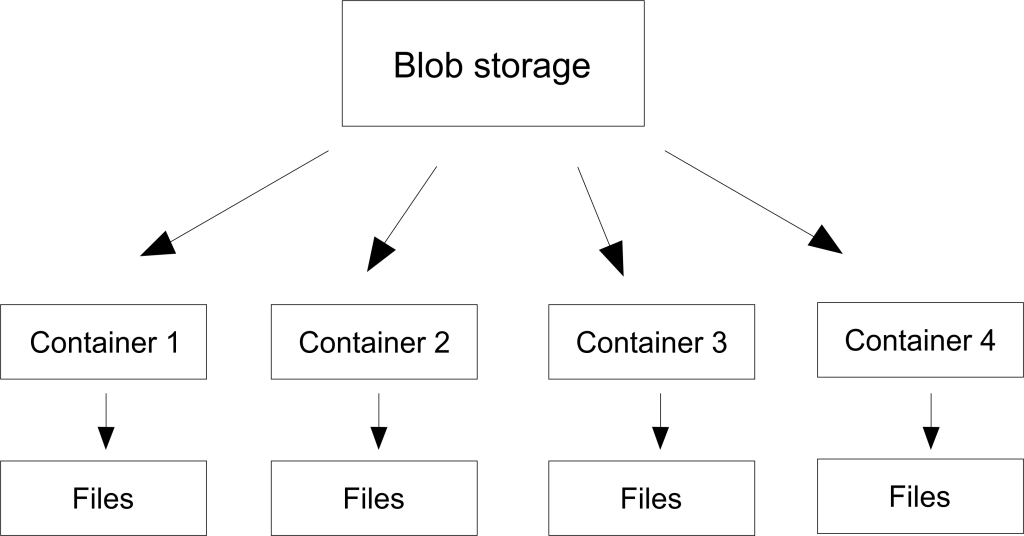

For larger file size situations, we need to be much more careful about how we process the file. These are fine for small files, but I wouldn’t recommend them for file sizes over 2MB. You’ll find a lot of file upload examples out there that use what I call the “small file” methods, such as IFormFile, or using a byte array, a memory stream buffer, etc. But if you want to let your users upload large files you will almost certainly want to do it using streams. With Azure Blob Storage, there multiple different ways to implement file uploads.

Implement file uploads in the wrong way, and you may end up with memory leaks, server slowdowns, out-of-memory errors, and worst of all unhappy users. What’s the big deal about file uploads? Well, the big deal is that it is a tricky operation.
